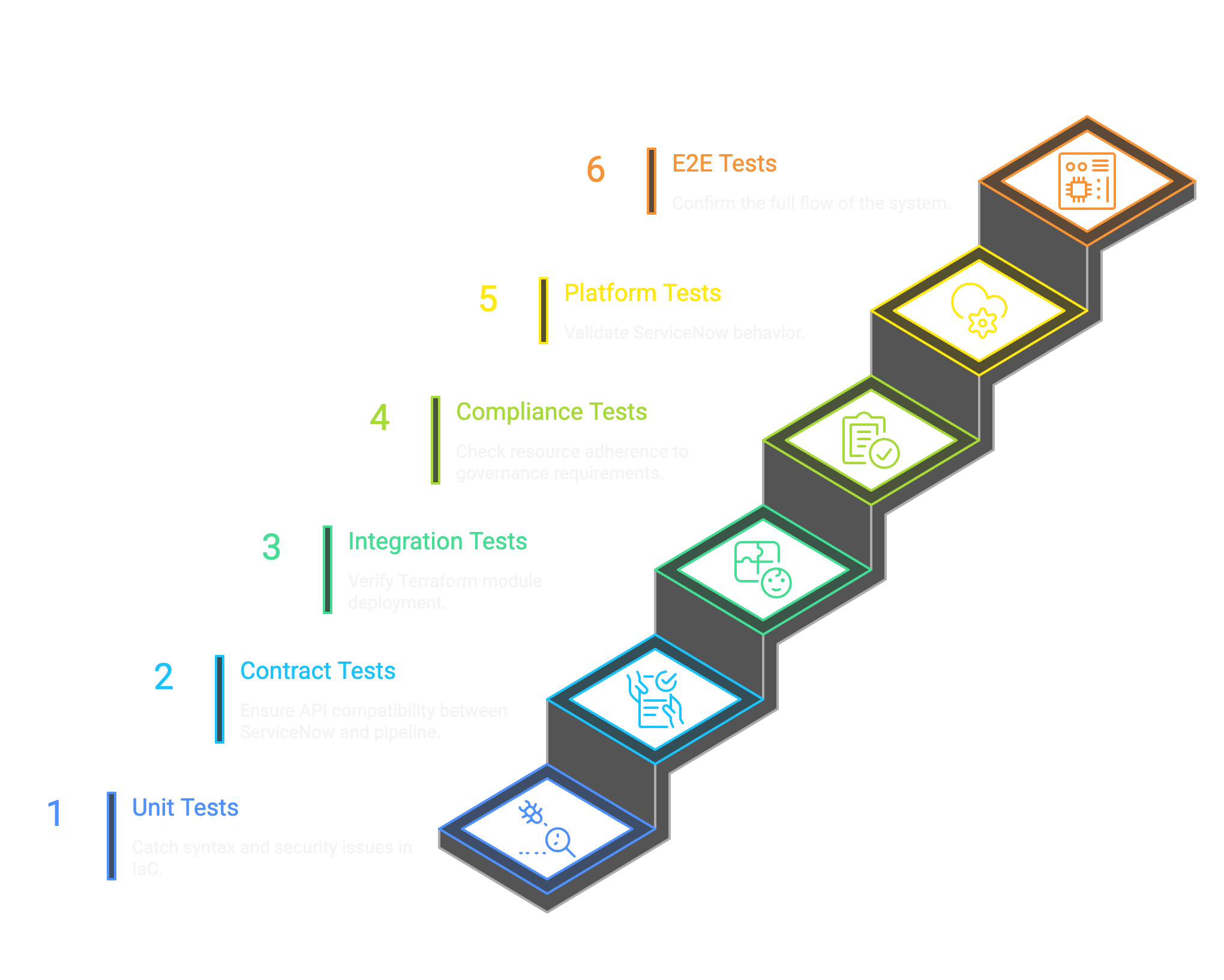

The IaC Testing Pyramid: Catching Infrastructure Bugs Before Production

A practical framework for testing Terraform and Bicep at every level

Infrastructure as Code changed how we deploy. But it didn't automatically change how we test.

Most teams treat IaC testing as an afterthought, if they test at all. The "testing strategy" is to run terraform apply in a dev environment and eyeball the results. Maybe someone reviews the PR. Maybe.

Then something breaks in production. A security group rule that was supposed to block traffic doesn't. A storage account is publicly accessible when it shouldn't be. A naming convention is wrong, and the monitoring dashboards can't find the resource. The kind of bugs that are obvious in hindsight but invisible in a code review.

The fix isn't more careful code reviews. It's a testing strategy that catches different types of errors at different stages. The same pyramid approach that works for application code, adapted for infrastructure.

The conceptual framework still applies

Application testing has mature patterns. Unit tests check individual functions. Integration tests check component interactions. End-to-end tests check user flows. Developers learn this on day one.

Infrastructure testing is murkier. What's a "unit" of infrastructure? How do you test a VNet without deploying it? What does "mocking" mean when your code talks directly to a cloud API?

The good news: the conceptual framework still applies. You want fast, cheap tests at the bottom catching simple errors, and slower, more realistic tests at the top catching integration issues. The specifics are just different.

Fast tests at the bottom, thorough tests at the top

Each level catches different problems:

| Level | What It Catches | Speed | Cost |
|---|---|---|---|
| Static Analysis | Syntax errors, formatting, basic misconfigurations | Seconds | Free |
| Plan Validation | Invalid references, type mismatches, provider errors | Minutes | Free |
| Deployment Tests | Resources that don't actually work when deployed | 10-30 min | Cloud costs |
| Policy/Compliance | Security violations, governance failures | Seconds-minutes | Free |

Static analysis catches the obvious stuff in seconds

This is the base of the pyramid. Fast, cheap, catches the obvious stuff.

For Terraform:

    # Syntax validation
    terraform validate

    # Formatting check
    terraform fmt -check

    # Linting for best practices
    tflint

    # Security scanning
    tfsec
    checkov -d .

For Bicep:

    # Syntax validation (built into build)
    az bicep build --file main.bicep

    # Linting
    az bicep lint --file main.bicep

    # Security scanning
    checkov -d . --framework bicep

What these catch:

- Syntax errors (missing brackets, typos)
- Invalid resource types or property names
- Deprecated features
- Obvious security issues (public IPs where they shouldn't be, missing encryption flags)
- Formatting inconsistencies
- Hardcoded secrets

When to run: On every commit, in pre-commit hooks, and as the first step in CI.

These tests run in seconds. There's no reason not to run them constantly.
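To make "run them constantly" concrete, here is a minimal sketch of a wrapper that runs the Terraform checks above in one pass and reports which ones failed. The names `STATIC_CHECKS`, `run_static_checks`, and the `runner` parameter are made up for illustration, and the sketch assumes the tools are on your PATH and that the directory has already been initialized (terraform validate needs that).

```python
import subprocess

# Commands taken from the list above; trim to the tools you actually have installed.
STATIC_CHECKS = [
    ["terraform", "validate"],
    ["terraform", "fmt", "-check"],
    ["tflint"],
    ["tfsec"],
]


def run_static_checks(checks=STATIC_CHECKS, runner=subprocess.run):
    """Run every check (no early exit) and return the commands that exited non-zero."""
    failed = []
    for cmd in checks:
        result = runner(cmd)
        if result.returncode != 0:
            failed.append(" ".join(cmd))
    return failed
```

Calling `run_static_checks()` from a pre-commit hook or the first CI step and exiting non-zero when the returned list is non-empty gives you one report of everything that failed, rather than stopping at the first error.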

Plan validation catches what static analysis misses

Static analysis checks the code in isolation. Plan validation checks what the code would do if applied.

For Terraform:

    terraform init
    terraform plan -out=tfplan

    # Convert to JSON for programmatic checks
    terraform show -json tfplan > plan.json

For Bicep:

    az deployment group what-if \
      --resource-group my-rg \
      --template-file main.bicep \
      --parameters @params.json

What plan validation catches:

- Resources that reference non-existent dependencies
- Type mismatches (string where number expected)
- Provider authentication issues
- Some quota limitations, where the provider or Azure's preflight validation checks them before apply
- Drift detection (what's different from current state)
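The "what's different" part is easy to inspect once the plan is in JSON. This is a rough sketch, assuming the `plan.json` file produced by `terraform show -json` above; it relies on the `resource_changes[].change.actions` field of that output, and the function names are made up for illustration.

```python
import json
from collections import Counter


def summarize_actions(plan):
    """Count planned actions and collect the addresses that would be destroyed."""
    counts = Counter()
    destroyed = []
    for rc in plan.get("resource_changes", []):
        actions = rc["change"]["actions"]
        counts.update(actions)
        # A replacement shows up as both "delete" and "create".
        if "delete" in actions:
            destroyed.append(rc["address"])
    return counts, destroyed


def load_plan(path="plan.json"):
    """Read a plan that was exported with `terraform show -json`."""
    with open(path) as f:
        return json.load(f)
```

A PR check could call `summarize_actions(load_plan())` and fail, or ask for an extra approval, whenever the destroyed list is non-empty.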

Programmatic plan testing:

The plan output is JSON. You can write assertions against it:

    import json

    with open('plan.json') as f:
        plan = json.load(f)

    # Check that all VMs have encryption enabled
    for resource in plan.get('resource_changes', []):
        if resource['type'] != 'azurerm_linux_virtual_machine':
            continue
        config = resource['change']['after']
        if config is None:  # resource is being destroyed; nothing to check
            continue
        assert config.get('encryption_at_host_enabled') is True, \
            f"VM {resource['address']} missing encryption"

Tools like terraform-compliance, conftest, and OPA (Open Policy Agent) let you write these checks declaratively:

    # policy/vm.rego
    # "main" is the namespace conftest evaluates by default
    package main

    # Rego v1 syntax (needs a reasonably recent OPA or conftest)
    import rego.v1

    deny contains msg if {
        resource := input.resource_changes[_]
        resource.type == "azurerm_linux_virtual_machine"
        # Skip VMs that are only being destroyed
        resource.change.actions[_] != "delete"
        not resource.change.after.encryption_at_host_enabled
        msg := sprintf("VM %v must have encryption enabled", [resource.address])
    }

When to run: On every PR, as a gate before merge.

Plan validation takes a few minutes but doesn't cost anything. No resources are actually created.
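The Bicep what-if output can act as the same kind of PR gate. This sketch assumes you saved the result as JSON (for example by adding `--no-pretty-print` to the what-if command and redirecting to a file) and that the JSON has a top-level `changes` list whose entries carry `changeType` and `resourceId`. Treat that shape as an assumption and check it against the output of your CLI version. The function names and the set of blocking types are made up for illustration.

```python
import json

# Change types this sketch treats as blocking; adjust to your own risk tolerance.
BLOCKING_CHANGE_TYPES = {"Delete"}


def blocking_changes(what_if, blocking=BLOCKING_CHANGE_TYPES):
    """Return the resource IDs whose what-if change type is in `blocking`."""
    return [
        change.get("resourceId", "<unknown>")
        for change in what_if.get("changes", [])
        if change.get("changeType") in blocking
    ]


def load_what_if(path="whatif.json"):
    """Read a what-if result that was saved as JSON (the file name is an assumption)."""
    with open(path) as f:
        return json.load(f)
```

Failing the PR when `blocking_changes(load_what_if())` is non-empty keeps a surprise deletion from slipping through review.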

Some bugs only appear when resources actually exist

Some bugs only appear when resources actually exist. The storage account deploys, but the firewall rules don't work. The VM starts, but the startup script fails. The database is created, but the connection string is wrong.

Deployment tests actually create infrastructure, run validations against it, then tear it down.

Frameworks:

- Terratest (Go): The most mature option. Create resources, run checks, destroy.
- Kitchen-Terraform: Ruby-based, integrates with InSpec for compliance checks.
- pytest + subprocess: Roll your own with Python.

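Example Terratest pattern:

    package test

    import (
        "testing"

        "github.com/gruntwork-io/terratest/modules/ssh"
        "github.com/gruntwork-io/terratest/modules/terraform"
        "github.com/stretchr/testify/assert"
    )

    func TestVmDeployment(t *testing.T) {
        terraformOptions := &terraform.Options{
            TerraformDir: "../examples/vm",
            Vars: map[string]interface{}{
                "environment": "test",
            },
        }

        // Deploy; the deferred Destroy runs even if a later check fails
        defer terraform.Destroy(t, terraformOptions)
        terraform.InitAndApply(t, terraformOptions)

        // Validate
        vmIP := terraform.Output(t, terraformOptions, "vm_private_ip")

        // Check SSH connectivity (the test runner must be able to reach the private IP)
        host := ssh.Host{
            Hostname:    vmIP,
            SshUserName: "azureuser",    // assumption: admin user configured by the module
            SshKeyPair:  loadKeyPair(t), // helper defined elsewhere, returns *ssh.KeyPair
        }
        ssh.CheckSshConnection(t, host)

        // Check that expected software is installed
        output := ssh.CheckSshCommand(t, host, "python3 --version")
        assert.Contains(t, output, "Python 3")
    }

What deployment tests catch: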

- Network connectivity issues
- IAM/RBAC misconfigurations (can the app actually access the database?)
- Startup script failures
- Resource dependencies that don't work in practice
- Cloud provider quirks that aren't documented
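Connectivity is the easiest of these to check yourself if you go the roll-your-own Python route. This is a small sketch using only the standard library; `port_is_open` is a made-up helper name, and the host and port you pass in would come from your own Terraform outputs.

```python
import socket


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

The negative case matters as much as the positive one: asserting that `port_is_open(...)` is False for a port the firewall is supposed to block is how you catch the security group rule that doesn't actually block anything.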

When to run: On PRs to main (or nightly). Not on every commit. Too slow and expensive.

Cost management: Use small resource sizes. Set up budget alerts on test subscriptions. Auto-destroy after a timeout in case tests fail mid-run.
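One way to get the "always tear down" behaviour in a pytest + subprocess setup is a context manager that destroys on the way out. This is a sketch under assumptions: `deployed`, `timeout_s`, and `runner` are illustrative names, the 30-minute default is arbitrary, and it assumes the terraform binary is on the PATH.

```python
import subprocess
from contextlib import contextmanager


@contextmanager
def deployed(terraform_dir, timeout_s=1800, runner=subprocess.run):
    """Apply the configuration in terraform_dir, then always destroy it."""

    def tf(*args):
        # check=True raises on a non-zero exit; timeout kills a hung command.
        runner(["terraform", *args], cwd=terraform_dir, check=True, timeout=timeout_s)

    try:
        tf("init", "-input=false")
        tf("apply", "-auto-approve", "-input=false")
        yield
    finally:
        # Runs whether the checks passed, failed, or a command timed out.
        tf("destroy", "-auto-approve", "-input=false")
```

Tests then wrap their checks in `with deployed("../examples/vm"):`. This does not help if the CI runner itself is killed, so a scheduled cleanup of the test subscription is still worth having.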

Policy tests enforce what the organization requires

This level checks whether your infrastructure meets organizational and regulatory requirements. It can run at multiple stages: pre-deployment (against plans) or post-deployment (against live resources).

Tools:

- Azure Policy: Built into Azure. Deny non-compliant resources or audit them.
- Open Policy Agent (OPA): General-purpose policy engine. Write policies in Rego.
- Checkov: Scans IaC for compliance with CIS benchmarks, SOC2, HIPAA, etc.
- Sentinel (HashiCorp): Policy as code for Terraform Cloud/Enterprise.

Example Azure Policy rule (deny public storage accounts). It uses notEquals "Disabled" so that accounts which omit the property are denied as well:

    {
      "if": {
        "allOf": [
          {
            "field": "type",
            "equals": "Microsoft.Storage/storageAccounts"
          },
          {
            "field": "Microsoft.Storage/storageAccounts/publicNetworkAccess",
            "notEquals": "Disabled"
          }
        ]
      },
      "then": {
        "effect": "deny"
      }
    }

Example OPA policy (require tags):

    package main

    import rego.v1

    # A set, not an array, so the set difference below is well-typed
    required_tags := {"CostCenter", "Owner", "Environment"}

    deny contains msg if {
        resource := input.resource_changes[_]
        resource.change.actions[_] == "create"
        tags := object.get(resource.change.after, "tags", {})
        missing := required_tags - object.keys(tags)
        count(missing) > 0
        msg := sprintf("Resource %v missing required tags: %v", [resource.address, missing])
    }

When to run:

- Pre-deployment (against plan): In CI, before apply. Fast, catches issues early.
- Post-deployment (against live resources): Continuously. Catches drift and manual changes.

Azure Policy is particularly powerful because it can prevent non-compliant resources from being created, even if someone bypasses your CI/CD pipeline and deploys manually.
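For the post-deployment side, a scheduled script can apply the same required-tags rule to live resources. This is a sketch that assumes you feed it the parsed JSON from something like `az resource list --output json`, where each entry has an `id` and a `tags` object that may be null. The function and constant names are made up for illustration.

```python
REQUIRED_TAGS = {"CostCenter", "Owner", "Environment"}


def untagged_resources(resources, required=REQUIRED_TAGS):
    """Map each resource ID to the sorted list of required tags it lacks."""
    findings = {}
    for res in resources:
        tags = res.get("tags") or {}  # tags may be null or absent for untagged resources
        missing = required - set(tags)
        if missing:
            findings[res["id"]] = sorted(missing)
    return findings
```

Anything this reports was either created outside the pipeline or changed by hand afterwards, which is exactly the drift the pre-deployment checks cannot see.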

"We don't have time to write tests"

"We don't have time to write tests."

Start with static analysis, the bottom of the pyramid. Adding terraform validate, terraform fmt -check, and tfsec to your pipeline takes about 15 minutes and catches real bugs. You can add higher levels incrementally.

"Deployment tests are too slow."

They are. That's why they're at the top of the pyramid, not the bottom. Run them nightly or on PRs to main, not on every commit. Use small resource sizes.

"Our IaC is too complex to test."

Complex IaC is exactly what needs testing. If you can't test it, you can't safely change it. Consider breaking it into smaller, testable modules.

"Azure Policy catches everything anyway."

Azure Policy is your last line of defense, not your only line. Catching issues at plan time is faster and less disruptive than having deployments fail because Policy blocked them.

What to run and when

| Level | Tools | Catches | Runs |
|---|---|---|---|
| Static | validate, fmt, tflint, tfsec | Syntax, formatting, obvious issues | Every commit |
| Plan | plan + OPA/conftest | Invalid references, type errors, policy violations | Every PR |
| Deploy | Terratest, Kitchen-Terraform | Real-world failures, connectivity, IAM | Merge/nightly |
| Policy | Azure Policy, continuous scans | Compliance, drift, governance | Continuously |
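If your pipeline is scripted, the table translates into a small lookup. The sketch below is one reading of it: the trigger names are placeholders for whatever events your CI system emits, and folding the continuous policy scans into a nightly run is an assumption, not something the table prescribes.

```python
# Trigger names are placeholders; map them to your CI system's events.
SCHEDULE = {
    "commit": ["static"],
    "pull_request": ["static", "plan"],
    "merge": ["static", "plan", "deploy"],
    "nightly": ["deploy", "policy"],
}


def levels_for(trigger):
    """Return the test levels to run for a CI trigger, cheapest first."""
    try:
        return SCHEDULE[trigger]
    except KeyError:
        raise ValueError(f"unknown trigger: {trigger!r}") from None
```

Keeping the mapping in one place makes it obvious when an expensive level creeps into a trigger that fires on every commit.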

The bottom line

Fast tests at the bottom. Thorough tests at the top. Compliance everywhere.

Stop hoping your IaC works. Prove it.