Single Error Boundary: A Radical Approach to Debugging AI Systems

Why we use one catch block in the entire codebase, and why it's the most productive debugging approach we've found

Standard advice for production systems: handle errors gracefully. Catch exceptions at every level. Provide fallbacks. Degrade elegantly. Never let the user see a stack trace.

We did the opposite.

One catch block in the entire codebase. Everything else raises. When something breaks, it breaks loudly, with a full stack trace, at a single predictable location.

This sounds reckless. It's actually the most productive debugging approach we've found for AI systems in active development.

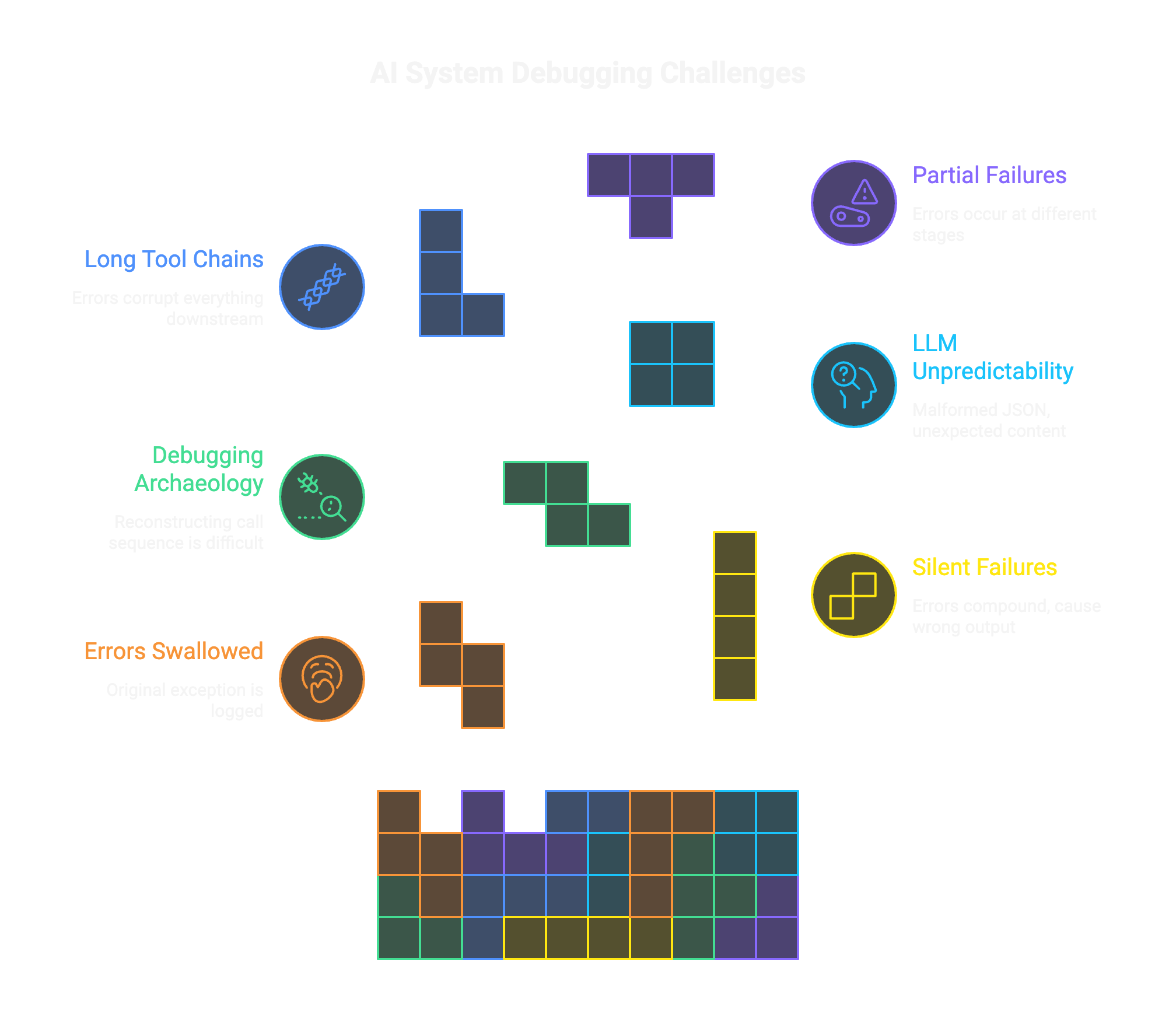

Why standard error handling fails for AI systems

The try/catch-everywhere pattern makes sense for stable systems with well-understood failure modes. For AI systems under active development, it creates a debugging nightmare.

Here's what happens with distributed error handling:

Original error buried in logs. Final output wrong. Nobody knows why.

- Errors get swallowed and logged, not surfaced. The original exception is caught, logged, and converted to a return value. The calling code doesn't know something went wrong.

- Silent failures compound. Tool A fails, returns None. Tool B receives None, does something weird, catches that exception, returns an empty result. Tool C gets empty input. The final output is wrong, but why?

- Debugging becomes archaeology. You grep through log files. You reconstruct the call sequence. You find the original error buried under three layers of "handled gracefully."
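To make the compounding concrete, here is a minimal sketch of a two-step chain written both ways. The function names and the comma-separated input are hypothetical, chosen only for illustration; the `try/except` at the bottom stands in for whatever sits at the top of your call stack.

```python
from typing import Optional


def load_scores_swallowing(raw: str) -> Optional[list[int]]:
    """Tool A, 'graceful' version: any parse error becomes None."""
    try:
        return [int(x) for x in raw.split(",")]
    except ValueError:
        return None  # the original error is gone from here on


def average_swallowing(scores: Optional[list[int]]) -> float:
    """Tool B, 'graceful' version: bad input becomes 0.0."""
    try:
        return sum(scores) / len(scores)
    except (TypeError, ZeroDivisionError):
        return 0.0  # looks like a real answer, but is not


def load_scores(raw: str) -> list[int]:
    """Tool A, raising version: the ValueError propagates."""
    return [int(x) for x in raw.split(",")]


def average(scores: list[int]) -> float:
    """Tool B, raising version."""
    return sum(scores) / len(scores)


bad_input = "3,4,oops"

# Swallowing chain: a plausible but wrong number, no hint of the cause.
silent_result = average_swallowing(load_scores_swallowing(bad_input))

# Raising chain: the failure names the exact value that broke parsing.
try:
    average(load_scores(bad_input))
    loud_error = None
except ValueError as exc:
    loud_error = str(exc)
```

The swallowing chain hands back a number that looks like a valid average, while the raising chain stops at the first bad value and says which one it was.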

AI systems are particularly vulnerable because:

- LLM responses are unpredictable. The model might return malformed JSON, unexpected content, or complete nonsense. You can't anticipate every failure mode.

- Tool chains are long. A typical agentic workflow involves multiple steps: data loading, planning, generation, validation, possibly multiple revisions. Errors anywhere corrupt everything downstream.

- Partial failures are common. The plan is fine but generation fails. Generation succeeds but validation fails. Validation passes but post-processing makes things worse.

When something goes wrong (and with LLMs, things go wrong in surprising ways), you need to know immediately and completely. Not "something failed somewhere, check the logs."
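As an illustration, a response parser that refuses to guess might look like the sketch below. The expected shape (a JSON object with a `plan` list) is an assumption made up for this example, not something taken from our codebase.

```python
import json


def parse_response(raw: str) -> dict:
    """Parse a model reply that is assumed to be a JSON object with a 'plan' list.

    There are no defaults and no fallbacks: anything unexpected raises,
    and the message carries the raw text that caused the problem.
    """
    payload = json.loads(raw)  # json.JSONDecodeError propagates on malformed JSON
    if not isinstance(payload, dict):
        raise TypeError(f"expected a JSON object, got {type(payload).__name__}: {raw!r}")
    if not isinstance(payload.get("plan"), list):
        raise ValueError(f"missing or non-list 'plan' field in: {raw!r}")
    return payload


plan = parse_response('{"plan": ["load", "generate"]}')
```

Including the raw response in the exception message means the failure report is self-contained: you see what the model actually returned without reconstructing it from logs.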

The single boundary pattern

Our approach: one location catches exceptions. Everything else raises.

Tools (all raise), Services (all raise)

↓ Single Boundary

↓ Convert to ToolResult

↓ Agent reasons about failure

The boundary is in the agent's _act() method, the place where the agent executes a tool. Here's the simplified pattern:

    # agent/core.py - THE ONLY TRY/CATCH IN THE SYSTEM
    import logging
    import traceback

    logger = logging.getLogger(__name__)

    # (method of the agent class)
    async def _act(self, action: str, parameters: dict) -> ToolResult:
        # An unknown action leaves tool as None; the resulting TypeError
        # is raised inside the try block and caught below like any other.
        tool = self.tools.get(action)
        try:
            result = await tool["execute"](parameters, self.context)
            return result
        except Exception as e:
            # Log EVERYTHING
            tb = traceback.format_exc()  # format once, reuse below
            logger.error(f"Tool {action} failed: {e}")
            logger.error(tb)
            # Convert to structured result the agent can reason about
            return ToolResult(
                success=False,
                error=str(e),
                traceback=tb,
            )

That's it. One boundary. Everything upstream (tools, services, utilities) just raises:

    # tools/generate/tool.py - NO TRY/CATCH
    async def execute(params: dict, context) -> ToolResult:
        prompt = params["prompt"]  # assumed parameter name; a missing key raises KeyError
        response = await context.llm.call(prompt)  # Might raise
        output = parse_response(response)  # Might raise
        context.state.save_artifact(output)  # Might raise
        return ToolResult(success=True, data={"output": output})

If llm.call() fails, the exception propagates. If parse_response() fails, the exception propagates. The boundary catches it, logs everything, and converts it to a result the agent can reason about.
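For readers who want to run the pattern end to end, here is a self-contained sketch. It simplifies a few things and should be read as an assumption-laden toy rather than our actual code: the `ToolResult` dataclass shape is guessed from the fields used above, tools are registered as plain async callables instead of dicts with an `"execute"` key, and `flaky_tool` is a hypothetical stand-in for a real tool.

```python
import asyncio
import logging
import traceback
from dataclasses import dataclass
from typing import Any, Awaitable, Callable, Optional

logger = logging.getLogger("agent")


@dataclass
class ToolResult:
    # Hypothetical shape, inferred from the field names used in the article.
    success: bool
    data: Optional[dict] = None
    error: Optional[str] = None
    traceback: Optional[str] = None


async def flaky_tool(params: dict, context: Any) -> ToolResult:
    """A tool with no error handling: a bad payload simply raises."""
    value = int(params["value"])  # KeyError or ValueError propagates
    return ToolResult(success=True, data={"doubled": value * 2})


class Agent:
    def __init__(self, tools: dict[str, Callable[[dict, Any], Awaitable[ToolResult]]]):
        self.tools = tools
        self.context = None

    async def _act(self, action: str, parameters: dict) -> ToolResult:
        """The single boundary: the only place exceptions are caught."""
        try:
            tool = self.tools[action]  # an unknown action raises KeyError here
            return await tool(parameters, self.context)
        except Exception as exc:
            tb = traceback.format_exc()
            logger.error("Tool %s failed: %s\n%s", action, exc, tb)
            return ToolResult(
                success=False,
                error=f"{type(exc).__name__}: {exc}",
                traceback=tb,
            )


agent = Agent({"double": flaky_tool})
ok = asyncio.run(agent._act("double", {"value": "21"}))
bad = asyncio.run(agent._act("double", {"value": "twenty-one"}))
```

Here `ok` carries the tool's data, while `bad` carries the exception type, message, and a traceback that points into `flaky_tool`. The tool itself never had to know about any of it.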

What happens when something breaks

Let's trace through a real failure: an external API times out mid-request.

With distributed error handling:

    [DEBUG] Processing item 4 of 12
    [ERROR] API timeout on item 4, retrying...
    [ERROR] Retry failed, skipping item
    [DEBUG] Processing item 5 of 12
    ...
    [INFO] Processing complete
    [INFO] Output saved to results.json

The result: output with mysterious gaps or corrupted data. The error was "handled." The item was skipped. Nobody noticed until a user reported something was wrong.

With a single error boundary:

    [ERROR] Tool 'process' failed: HTTPTimeoutError: Request timed out after 30s
    [ERROR] Traceback:
      File "agent/core.py", line 295, in _act
        result = await tool["execute"](parameters, self.context)
      File "tools/process/tool.py", line 87, in execute
        response = await self._call_api(item)
      File "tools/process/tool.py", line 143, in _call_api
        return await self.client.request(...)
      File "httpx/_client.py", line 412, in request
        raise HTTPTimeoutError("Request timed out after 30s")
    HTTPTimeoutError: Request timed out after 30s

The result: the agent knows processing failed. It can decide whether to retry, abort, or try a different approach. There's no mysterious partial output. The failure is visible, complete, and actionable.

The debugging experience is completely different. With distributed handling, you're searching logs for clues. With a single boundary, the error is right there, complete with the exact line that failed.
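How the failure reaches the agent's reasoning step depends on your agent loop. One plausible approach, sketched here under assumptions, is to render the result as a text observation for the next prompt. The `to_observation` helper, the `max_tb_lines` parameter, and the `ToolResult` shape are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ToolResult:
    # Hypothetical shape, inferred from the field names used in the article.
    success: bool
    data: Optional[dict] = None
    error: Optional[str] = None
    traceback: Optional[str] = None


def to_observation(action: str, result: ToolResult, max_tb_lines: int = 6) -> str:
    """Render a tool outcome as text for the agent's next reasoning step."""
    if result.success:
        return f"Tool '{action}' succeeded: {result.data}"
    # Keep only the tail of the traceback: the deepest frames are usually
    # the most informative, and the full trace is already in the logs.
    tail = (result.traceback or "").strip().splitlines()[-max_tb_lines:]
    return "\n".join([f"Tool '{action}' FAILED: {result.error}", *tail])


failed = ToolResult(
    success=False,
    error="Request timed out after 30s",
    traceback=(
        "Traceback (most recent call last):\n"
        '  File "tools/process/tool.py", line 87, in execute\n'
        "TimeoutError: Request timed out after 30s"
    ),
)
observation = to_observation("process", failed)
```

Because the failure arrives as an explicit observation, the agent cannot mistake a failed step for a successful one with empty output.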

The intentional evolution

This isn't permanent architecture. It's phase-appropriate architecture.

Current: Active Development

- Goal: Understand failure modes

- Approach: Visibility over resilience

- Result: Fast debugging, clear understanding

Future: Production Hardening

- Goal: Handle known failure modes

- Approach: Resilience where proven

- Result: Graceful degradation

The key insight: you can't add graceful handling until you understand what fails. And you can't understand what fails if errors are being swallowed and logged.

The trap is adding "resilience" before you understand failure modes. You end up catching errors you don't understand, handling them in ways that seem reasonable but create subtle bugs. Silent failures. Mysterious behaviors. Users reporting bugs you can't reproduce.

We add retry logic for API timeouts once we know they happen. We add fallbacks for specific LLM parsing failures once we've seen them. But we don't add generic try/catch blocks that hide problems we haven't discovered yet.
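Targeted handling of an observed failure can stay narrow. The sketch below retries one exception type and lets everything else propagate. It is an illustration, not our production code: the built-in `TimeoutError` stands in for whatever timeout exception your HTTP client actually raises, and `retry_on_timeout`, `attempts`, and `base_delay` are made-up names and defaults.

```python
import asyncio
from typing import Awaitable, Callable, TypeVar

T = TypeVar("T")


async def retry_on_timeout(
    call: Callable[[], Awaitable[T]],
    attempts: int = 3,
    base_delay: float = 0.5,
) -> T:
    """Retry only TimeoutError; every other exception propagates untouched."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return await call()
        except TimeoutError:
            if attempt == attempts:
                raise  # out of retries: fail loudly at the boundary
            # Exponential backoff: base_delay, 2x, 4x, ...
            await asyncio.sleep(base_delay * 2 ** (attempt - 1))
    raise AssertionError("unreachable")


calls = {"n": 0}


async def flaky() -> str:
    """Simulated API call that times out twice, then succeeds."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise TimeoutError("simulated timeout")
    return "ok"


result = asyncio.run(retry_on_timeout(flaky, attempts=3, base_delay=0.0))
```

The `except` clause names a single exception type on purpose. A parsing bug or a `KeyError` inside `call` still surfaces at the boundary on the first attempt instead of being retried into silence.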

When to use this pattern

Good fit:

- Early development of complex systems

- AI/ML pipelines with many failure modes

- Systems where silent failures are worse than crashes

- Teams that need to understand behavior before hardening

Bad fit (for now):

- User-facing production APIs

- Well-understood systems with known failure modes

- Situations where partial results beat no results

The qualifier "for now" is important. As we move toward production, we add targeted error handling. But we add it based on observed failures, not anticipated ones.

The implementation

If you want to adopt this pattern:

1. Identify your boundary. Where does the orchestrator call into components? That's where you catch.

2. Remove all other try/catch blocks. This feels scary. Do it anyway. Leave only cleanup patterns (try/finally for resource management).

3. Log completely at the boundary. Full stack trace. All parameters. Timestamp. Everything you'll need to understand what happened.

4. Return structured errors. The orchestrator needs to reason about failures. Give it typed error results, not just boolean success flags.

5. Track your failures. Every time you debug something, note the failure mode. When you've seen the same failure multiple times, that's when you add targeted handling.
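Steps 4 and 5 can be sketched together: a typed error record plus a counter of failure modes. Everything here is illustrative. The `error_type` field, the `record_failure` and `candidates_for_handling` helpers, and the threshold of three occurrences are assumptions, not a prescription.

```python
import traceback
from collections import Counter
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class StructuredError:
    action: str
    error_type: str  # e.g. "TimeoutError": lets the orchestrator branch on type
    error: str
    traceback: str
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


# In-memory tally of (action, exception type) pairs; a real system might persist this.
failure_counts: Counter = Counter()


def record_failure(action: str, exc: BaseException) -> StructuredError:
    """Build a typed error and count the (action, exception type) pair."""
    failure_counts[(action, type(exc).__name__)] += 1
    return StructuredError(
        action=action,
        error_type=type(exc).__name__,
        error=str(exc),
        traceback="".join(
            traceback.format_exception(type(exc), exc, exc.__traceback__)
        ),
    )


def candidates_for_handling(threshold: int = 3) -> list[tuple[str, str]]:
    """Failure modes seen at least `threshold` times."""
    return [key for key, n in failure_counts.items() if n >= threshold]


for _ in range(3):
    record_failure("process", TimeoutError("Request timed out after 30s"))
err = record_failure("generate", ValueError("malformed JSON"))
```

After this run, `candidates_for_handling()` lists only the repeated timeout in `process`. The one-off parsing failure stays visible in the counts but has not yet earned targeted handling.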

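    # The pattern
    import traceback
    from datetime import datetime, timezone

    # execute_action, log_complete_failure, and StructuredError
    # stand in for your own code.
    async def orchestrator_boundary(action, params):
        try:
            return await execute_action(action, params)
        except Exception as e:
            log_complete_failure(action, params, e)
            return StructuredError(
                action=action,
                error=str(e),
                traceback=traceback.format_exc(),
                timestamp=datetime.now(timezone.utc),
            )

The deeper lesson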

Error handling strategy should match your development phase.

"Fail gracefully" is advice for stable systems with well-understood failure modes. You know what breaks. You know how to handle it. Graceful degradation improves user experience.

But unstable systems (systems under active development, systems where you're still discovering failure modes) need different medicine. Fail loudly. Fail completely. Make failures impossible to miss.

You can always add grace later. You can't retroactively add visibility to failures that were silently swallowed months ago.

We've been running this pattern across multiple AI projects. Every failure we see, we understand completely. We know exactly which API calls time out, which LLM responses parse incorrectly, which edge cases cause validation failures.

That knowledge is invaluable. And we only have it because we refused to hide failures behind try/catch blocks.

The Bottom Line

This is how we build AI systems: with approaches that match the development phase, not cargo-culted "best practices" that hide problems. If you're building AI systems and struggling with mysterious failures, we should talk.